From online to offline

Since the wide release of ChatGPT in the winter of 2022, I've used LLMs constantly, at home and at work. I've used them for a wide range of purposes, from writing bawdy limericks in the style of various famous people to helping very large companies scale their supporting functions without having to increase manpower, and just about everything in between.

In this time, the hardest barrier I've hit is privacy, which practically restricts me from using any online LLM product for work. Whenever I make exceptions, I must rigorously ensure that no data I provide could even hint at the specifics of the actual work I am doing.

As a consultant, even if my company had an enterprise subscription to a product like ChatGPT that promised not to train on or otherwise leak provided data, I would be very reluctant to use it with work or customer data. That would make it only marginally more useful than the way I already use the online products.

This is why I've been following the local, offline LLM market with interest for some time. Like many, I've played with tools like Ollama and LangChain, but until recently I didn't feel that what I could get running on my work or home computers provided enough benefit to be worth the hassle of setting it up and the resource drain of running it.

This has all changed recently, thanks to a few developments:

- Purchasing a new MBP M4 with 16GB of RAM,[1]

- Switching over to a work MBP M1 with 16GB RAM,

- The release of Llama 3.2, which has a 3B model that easily runs locally and doesn't totally suck,

- My reinstalling Ollama while experimenting with a text processing home project,

- The release of Phi4, which is just about adequate for my purposes and runs reasonably well locally.

The Setup

Here's my current setup:

Prereqs

- Install Ollama,

- With Ollama, download an adequate model (currently, I'm trying out Phi4),

- Find an adequate UI for Ollama (I use Emacs + gptel),

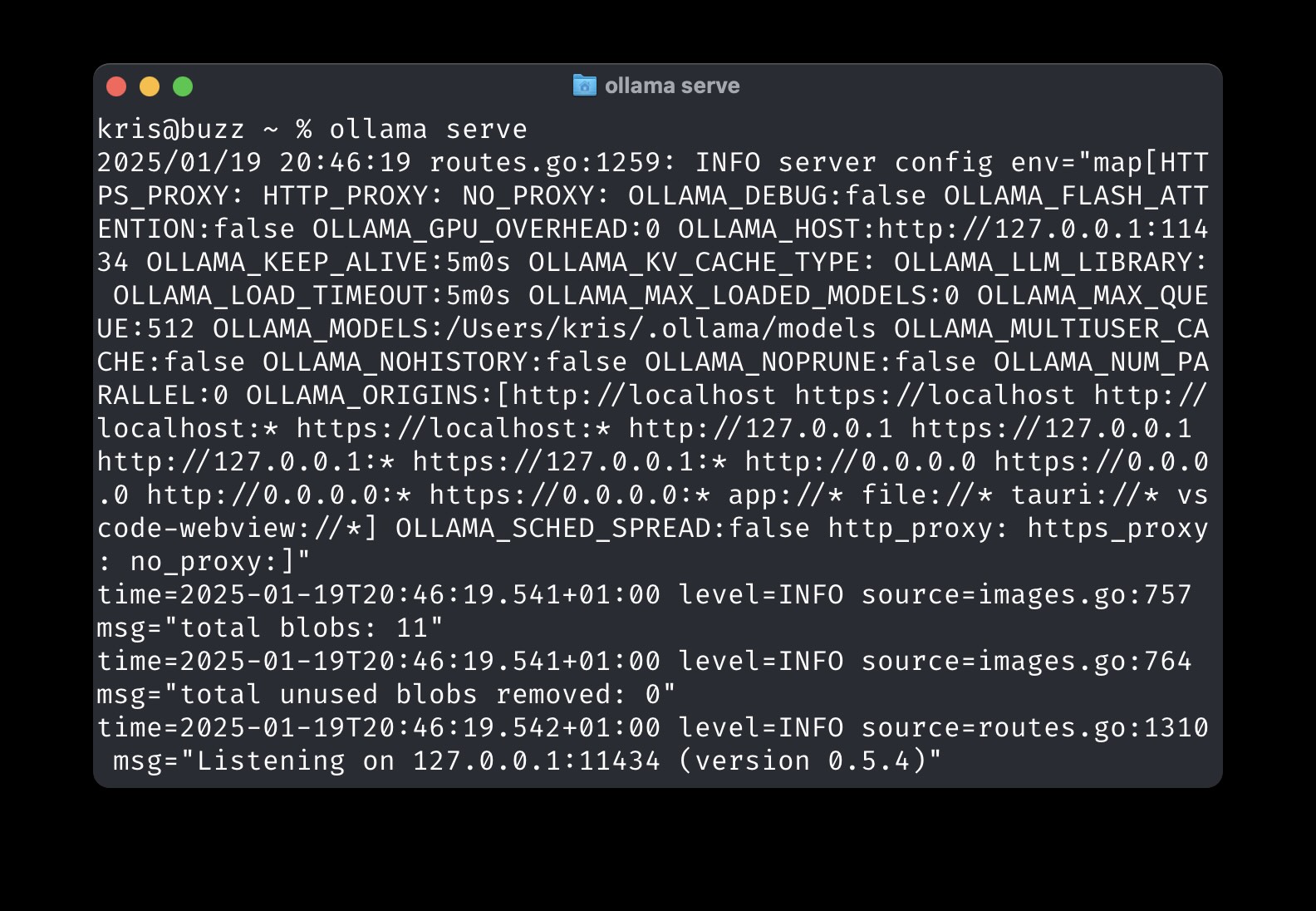

- In a terminal, start Ollama.
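Before wiring up a UI, it can help to confirm that the server is running and that the model has been pulled. Below is a minimal Python sketch, assuming Ollama is listening on its default local address (`http://localhost:11434`) and using its `/api/tags` endpoint, which lists installed models. The helper names (`installed_models`, `has_model`, `fetch_tags`) are made up for this illustration.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434"


def installed_models(tags: dict) -> list[str]:
    """Extract model names from the JSON returned by GET /api/tags."""
    return [m["name"] for m in tags.get("models", [])]


def has_model(tags: dict, wanted: str) -> bool:
    """True if `wanted` matches an installed model, ignoring the ':tag' suffix."""
    return any(name.split(":")[0] == wanted for name in installed_models(tags))


def fetch_tags(base_url: str = OLLAMA_URL) -> dict:
    """Ask the running Ollama server which models it has pulled.

    Raises an OSError subclass (urllib.error.URLError) if the server is down.
    """
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as resp:
        return json.load(resp)
```

With the server up, `has_model(fetch_tags(), "phi4")` should tell you whether Phi4 is ready to use; if the call raises a connection error, Ollama probably isn't running yet.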

As I do all my work tracking & note-taking in Emacs (Org mode) anyway, having gptel converse with me in-buffer makes for a very smooth workflow.

The real slick benefit to this toolset is that if I want to provide context, I can easily select the relevant text and send it along with the prompt:

(see video examples from gptel until I can make a good screenclip)
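For readers outside Emacs, the "selected text plus prompt" idea can be approximated directly against Ollama's `/api/chat` endpoint. This is only a sketch of the general pattern, not how gptel does it internally: the function names, the wording of the system message, and the choice to pass the context as a system message are all my own assumptions.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"


def build_chat_payload(prompt: str, context: str = "", model: str = "phi4") -> dict:
    """Build the JSON body for a single, non-streaming chat request."""
    messages = []
    if context.strip():
        # Hypothetical convention: pass the selected text as a system message.
        messages.append({
            "role": "system",
            "content": "Use the following text as context:\n\n" + context,
        })
    messages.append({"role": "user", "content": prompt})
    return {"model": model, "messages": messages, "stream": False}


def ask(prompt: str, context: str = "", model: str = "phi4",
        url: str = OLLAMA_CHAT_URL) -> str:
    """Send the prompt (and optional context) to the local model, return its reply."""
    body = json.dumps(build_chat_payload(prompt, context, model)).encode("utf-8")
    req = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req, timeout=120) as resp:
        return json.load(resp)["message"]["content"]
```

Something like `ask("Rewrite this as a bulleted list.", context=selected_text)` then mirrors the select-and-send flow, with everything staying on the local machine.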

Additionally, at home, I can just configure gptel to use ChatGPT instead of one of the far weaker local models.
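The same switch is easy to picture outside of gptel, because Ollama also exposes an OpenAI-compatible endpoint under `/v1`. The sketch below is purely illustrative: the `Backend` class, the `work`/`home` labels, and the `gpt-4o` model name are assumptions of mine, and unknown locations deliberately fall back to the local model so that nothing leaves the machine by accident.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Backend:
    name: str
    url: str
    model: str
    needs_api_key: bool


# Hypothetical configuration; model names are examples, not recommendations.
BACKENDS = {
    "work": Backend(
        name="ollama",
        url="http://localhost:11434/v1/chat/completions",
        model="phi4",
        needs_api_key=False,
    ),
    "home": Backend(
        name="openai",
        url="https://api.openai.com/v1/chat/completions",
        model="gpt-4o",
        needs_api_key=True,
    ),
}


def pick_backend(location: str) -> Backend:
    """Return the backend for a location, defaulting to the local (private) one."""
    return BACKENDS.get(location, BACKENDS["work"])
```

Since both endpoints accept the same `model` plus `messages` request shape, the rest of a client can stay the same and only the `Backend` changes.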

So far with Phi4 I've had success asking it to do many of the things I would typically go to ChatGPT for: reformatting & restructuring text, (simple) translations, and creative brainstorming.

I find Phi4, and the other 'small' language models I've used, to be godawful at providing facts and at troubleshooting where in-depth information is required - obviously areas where the larger LLMs have a sizeable advantage. For example, I recently asked Phi4 to help debug a broken Excel formula, and while it did provide a correct answer, it buried it under several long-winded, irrelevant explanations of other, hallucinated errors.[2]

So, mixed results - but good enough for now, desperate as I am to add a handy little GenAI tool to my toolbelt, especially at work. Over the coming months, I'm looking forward to improving my workflow, tuning my tools, and seeing more powerful models released!

1. I'm already wishing I had opted for more RAM, but my budget was (relatively) strict, and I feared that Apple's pricing ladders would convince me to continue upgrading and shelling out money.

2. Adding to this, I expect two issues with my use of Phi4: 1) its stated strong point is mathematics, which I have less use for at work, and 2) I haven't learned how to prompt a model like Phi4 optimally - to be honest, I've struggled to find any guidance or examples for Phi (4 or its predecessors) online.